Agent Operator

Agent v1.3.2 [ September 2, 2025 ]

- Multi-turn tool calling with Toolbudget parameter

- Parallel tool execution capability

- Turn table system for conversation event capture

- Chain ID tracking for multi-turn conversations

- Reasoning model support for thinking models with reasoning level par

- Added Anthropic model support

- Enhanced streaming tool detection across providers

- Improved tool call deduplication and history management

- Better error handling and logging levels

- Force option for tool choice and auto-generated chain IDs

- Enhanced tool loading robustness for backward compatibility

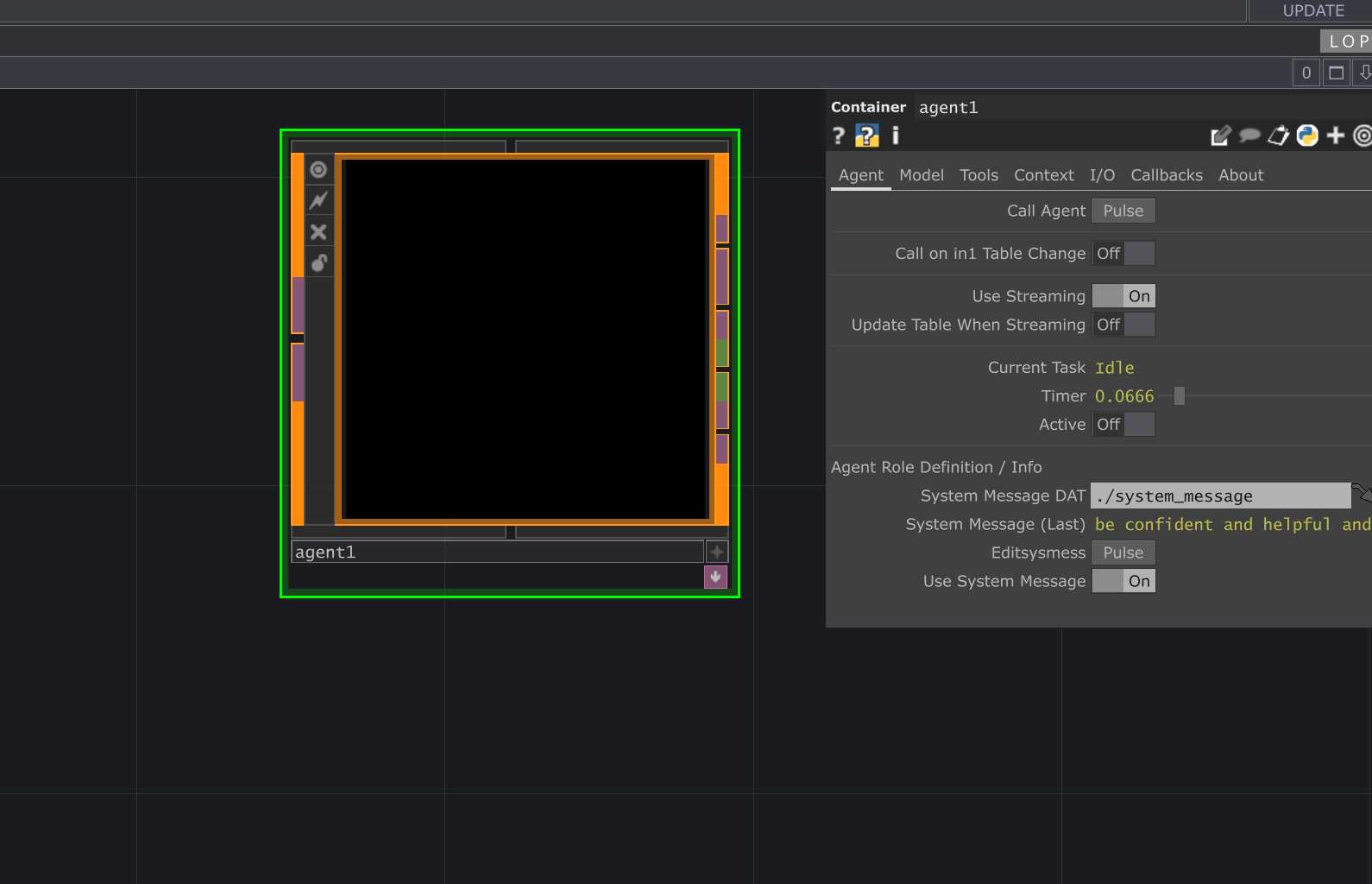

The Agent operator is the central component for managing interactions with Large Language Models (LLMs). It handles assembling prompts, sending requests (including images and audio), processing responses, managing conversation history, executing tools, and handling callbacks.

Key Features

- Connect directly to multiple AI providers through LiteLLM integration

- Send and receive text, images, and audio (with provider support)

- Control LLM parameters (temperature, max tokens, etc.)

- Access advanced features like streaming responses

- Configurable output formats (conversation, table, parameters)

- Enable dynamic AI tools for parameter control and custom functionality

- Grab contextual information from other operators

- Callback system for integrating with other components

Parameters

Page: Agent

op('agent').par.Call Pulse Pulse this parameter to initiate a call to the language model using the current settings.

- Default:

False

op('agent').par.Onin1 Toggle When enabled, the Agent will automatically call the LLM whenever the input table changes.

- Default:

False

op('agent').par.Streaming Toggle When enabled, responses are delivered in chunks as they are generated.

- Default:

False

op('agent').par.Streamingupdatetable Toggle When enabled, conversation table is updated as streaming chunks arrive.

- Default:

False

op('agent').par.Taskcurrent Str Displays the current state of the Agent. Read-only parameter updated by the system.

- Default:

"" (Empty String)

op('agent').par.Timer Float Displays timing information for the last LLM call.

- Default:

0.0

op('agent').par.Active Toggle Indicates if the Agent is currently processing a request. Read-only parameter.

- Default:

False

op('agent').par.Cancelcall Pulse Pulse to cancel any currently active API call or tool execution.

- Default:

False

op('agent').par.Systemmessagedat OP The DAT containing the system message text to send to the LLM. This defines the agent's role/persona.

- Default:

./system_message

op('agent').par.Displaysysmess Str Displays the last system message that was sent. Read-only parameter.

- Default:

"" (Empty String)

op('agent').par.Editsysmess Pulse Pulse to open the system message DAT for editing.

- Default:

False

op('agent').par.Usesystemmessage Toggle When disabled, system messages are not sent to the model (for models that do not support system messages).

- Default:

False

op('agent').par.Chainid Str Chain ID for tracking calls in orchestration systems. When set, this will be used instead of auto-generating one.

- Default:

"" (Empty String)

Page: Model

Understanding Model Selection

Operators utilizing LLMs (LOPs) offer flexible ways to configure the AI model used:

- ChatTD Model (Default): By default, LOPs inherit model settings (API Server and Model) from the central ChatTD component. You can configure ChatTD via the "Controls" section in the Operator Create Dialog or its parameter page.

- Custom Model: Select this option in "Use Model From" to override the ChatTD settings and specify the API Server and AI Model directly within this operator.

- Controller Model: Choose this to have the LOP inherit its API Server and AI Model parameters from another operator (like a different Agent or any LOP with model parameters) specified in the Controller [ Model ] parameter. This allows centralizing model control.
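The three options can be read as a simple resolution rule. The sketch below is illustrative only and is not the operator's implementation: plain dictionaries stand in for ChatTD, the operator's own parameters, and the controller. The mode names other than controller_model, and the keys api_server and model, are assumptions made for the example.

```python
def resolve_model(mode, chattd, own, controller=None):
    """Return (api_server, model) for the given 'Use Model From' mode."""
    if mode == "chattd_model":        # hypothetical name for the default mode
        source = chattd
    elif mode == "custom_model":      # hypothetical name for the override mode
        source = own
    elif mode == "controller_model":  # name taken from the Modelcontroller description
        if controller is None:
            raise ValueError("controller_model requires a controller operator")
        source = controller
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return source["api_server"], source["model"]
```

The point of the rule is that exactly one source supplies both values, so the API server and the model never come from different places.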

The Search toggle filters the AI Model dropdown based on keywords entered in Model Search. The Show Model Info toggle (if available) displays detailed information about the selected model directly in the operator's viewer, including cost and token limits.

Available LLM Models + Providers Resources

The following links point to API key pages or documentation for the supported providers. For a complete and up-to-date list, see the LiteLLM provider docs.

op('agent').par.Maxtokens Int The maximum number of tokens the model should generate.

- Default:

256

op('agent').par.Temperature Float Controls randomness in the response. Lower values are more deterministic.

- Default:

0

op('agent').par.Modelcontroller OP Operator providing model settings when 'Use Model From' is set to controller_model.

- Default:

None

op('agent').par.Search Toggle Enable dynamic model search based on a pattern.

- Default:

off

- Options:

- off, on

op('agent').par.Modelsearch Str Pattern to filter models when Search is enabled.

- Default:

"" (Empty String)

op('agent').par.Showmodelinfo Toggle Displays detailed information about the selected model in the operator's viewer.

- Default:

1

- Options:

- off, on
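The Model page parameters can also be set from a script. The helper below is a minimal sketch: apply_settings is a hypothetical name, and it assumes the agent object exposes its parameters through a par attribute, as the op('agent').par.<Name> references on this page suggest.

```python
def apply_settings(agent, settings):
    """Assign each value in `settings` to the parameter of the same name."""
    for name, value in settings.items():
        # Equivalent to writing agent.par.<name> = value for each entry.
        setattr(agent.par, name, value)

# Inside TouchDesigner (not executed here):
# apply_settings(op('agent'), {'Maxtokens': 512, 'Temperature': 0.2})
```

Keeping the values in one dictionary makes it easy to store presets and switch between them.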

Page: Tools

op('agent').par.Usetools Toggle When enabled, the Agent can use tools defined below during its interactions.

- Default:

False

op('agent').par.Toolfollowup Toggle When enabled, the agent makes a follow-up API call after tool execution to generate a final response. When disabled, the agent only executes tools without generating responses.

- Default:

True

op('agent').par.Toolturnbudget Int Maximum number of tool turns the agent may take (initial tool turn counts as 1). Only applies when Allow Follow-up Tools is enabled.

- Default:

1

op('agent').par.Paralleltoolcalls Toggle If enabled and tools are present, request parallel tool calls (LiteLLM parallel_tool_calls). Default Off.

- Default:

False
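One way to picture how Toolfollowup and Toolturnbudget interact is a loop that lets the model request tools until the budget is spent, then asks for a final answer with tools disabled. The sketch below is an assumption about the general pattern, not the operator's code: call_model, run_tool, and the message dictionaries are hypothetical stand-ins.

```python
def run_with_tool_budget(call_model, run_tool, messages, budget=1):
    """Run tool turns until the model stops asking or the budget is used up.

    Returns (final_text, tool_turns_used).
    """
    turns_used = 0
    while True:
        allow_tools = turns_used < budget
        reply = call_model(messages, allow_tools)
        # Once the budget is spent, any further tool requests are ignored.
        calls = reply.get("tool_calls") if allow_tools else None
        if not calls:
            return reply.get("content", ""), turns_used
        turns_used += 1  # the first batch of tool calls counts as turn 1
        for call in calls:
            messages.append({
                "role": "tool",
                "name": call["name"],
                "content": str(run_tool(call)),
            })
```

With a budget of 1 this reduces to a single round of tool calls followed by one follow-up request for the final response.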

op('agent').par.Tool Sequence Sequence parameter that controls groups of external tools.

- Default:

0

op('agent').par.Tool0op OP The operator that provides the tool functionality (must have a compatible extension with GetTool method).

- Default:

"" (Empty String)

Page: Context

op('agent').par.Contextop OP Optional operator that can provide additional context to include in the prompt (must have GrabOpContextEXT extension).

- Default:

"" (Empty String)

op('agent').par.Useaudio Toggle When enabled, the specified audio file will be included in the prompt (for providers supporting audio input).

- Default:

False

op('agent').par.Audiofile File Path to the audio file to include in the prompt.

- Default:

"" (Empty String)

op('agent').par.Sendtopimage Toggle If enabled, send the TOP specified in Topimage directly with the prompt.

- Default:

False

op('agent').par.Topimage TOP Specify a TOP operator to send as an image.

- Default:

"" (Empty String)

Page: I/O

op('agent').par.Enablepromptcaching Toggle Enable prompt caching for supported providers to reduce costs and improve performance.

- Default:

False

op('agent').par.Jsonmode Toggle When enabled, the agent will format the response as JSON.

- Default:

False

op('agent').par.Thinkingreplace Str The text to replace the 'thinking' text with.

- Default:

"" (Empty String)

op('agent').par.Thinkingphrases Str The start and end phrases that denote 'thinking' text.

- Default:

<think>,</think>

op('agent').par.Displaytext Toggle Show/hide the text display in the viewer.

- Default:

False

op('agent').par.Tableview Toggle Show/hide the table view in the viewer.

- Default:

False

op('agent').par.Showmetadata Toggle Show/hide the metadata table in the viewer.

- Default:

False
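With Jsonmode enabled, downstream code will usually want to decode the response text before using it. The helper below is a small sketch using only the standard library. parse_json_reply is a hypothetical name, and it assumes the response reaches your script as a plain string.

```python
import json

def parse_json_reply(text, default=None):
    """Return the decoded JSON value, or `default` if `text` is not valid JSON."""
    try:
        return json.loads(text)
    except (TypeError, ValueError):
        # TypeError covers non-string input such as None;
        # json.JSONDecodeError is a subclass of ValueError.
        return default
```

Returning a default instead of raising keeps a malformed reply from interrupting the rest of the network.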

Page: Callbacks

op('agent').par.Callbackdat DAT The DAT containing callback functions that respond to Agent events.

- Default:

ChatTD_callbacks

op('agent').par.Editcallbacksscript Pulse Pulse to open the callback DAT for editing.

- Default:

False

op('agent').par.Createpulse Pulse Pulse to create a new callback DAT if one doesn't exist.

- Default:

False

op('agent').par.Ontaskstart Toggle Enable/disable the onTaskStart callback, triggered when a new request begins.

- Default:

False

op('agent').par.Ontaskcomplete Toggle Enable/disable the onTaskComplete callback, triggered when a request completes successfully.

- Default:

False

op('agent').par.Ontoolcall Toggle Enable/disable the onToolCall callback, triggered when a tool is called.

- Default:

False

op('agent').par.Ontaskerror Toggle Enable/disable the onTaskError callback, triggered when a request encounters an error.

- Default:

False
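As a rough illustration of what the four toggles enable, the sketch below records each event in a list. It assumes info behaves like a dictionary and that the keys model, response, error, and tool_calls are present, following the examples in this page's callback stubs. The actual contents of info may differ, and the events list is a hypothetical helper.

```python
events = []  # in-memory log used only for this example

def onTaskStart(info):
    """Record which model the request was sent to."""
    events.append(("start", info.get("model")))

def onTaskComplete(info):
    """Record the response of a successful request."""
    events.append(("complete", info.get("response")))

def onTaskError(info):
    """Record the error reported for a failed request."""
    events.append(("error", info.get("error")))

def onToolCall(info):
    """Record every tool call; tolerate a missing or empty list."""
    for call in info.get("tool_calls") or []:
        events.append(("tool", call))
```

Each function only runs if its matching toggle on this page is enabled.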

Page: About

op('agent').par.Chattd OP Reference to the ChatTD operator for configuration.

- Default:

"" (Empty String)

op('agent').par.Showbuiltin Toggle Show built-in TouchDesigner parameters.

- Default:

False

op('agent').par.Version Str Current version of the operator.

- Default:

"" (Empty String)

op('agent').par.Lastupdated Str Date of last update.

- Default:

"" (Empty String)

op('agent').par.Website Str Related website or documentation.

- Default:

"" (Empty String)

op('agent').par.Creator Str Operator creator.

- Default:

"" (Empty String)

op('agent').par.Clearlog Pulse Pulse to clear the logs.

- Default:

False

op('agent').par.Bypass Toggle Bypass the operator.

- Default:

False

Callbacks

The Agent operator provides several callbacks that allow you to react to different stages of its operation. Define corresponding functions in the DAT specified by the Callbackdat parameter.

Available callbacks: onTaskStart, onTaskComplete, onTaskError, onToolCall

    # Callback methods

    def onTaskStart(info):
        """Called when a new task begins processing."""
        # Access information about the task, model, etc.
        # Example: model = info.get('model')
        pass

    def onTaskComplete(info):
        """Called when a task is finished processing."""
        # Access the response, tokens used, etc.
        # Example: response = info.get('response')
        pass

    def onTaskError(info):
        """Called when a task encounters an error."""
        # Access error details
        # Example: error = info.get('error')
        pass

    def onToolCall(info):
        """Called when a tool is called by the agent."""
        # Access the tool call information
        # Example: tool_calls = info.get('tool_calls')
        pass

Usage Examples

Basic LLM Conversation

- Create an agent operator.

- Create a tableDAT and connect it to the first input of the agent.

- In the tableDAT, add a row with the role “user” and your message in the “message” column.

- Pulse the “Call Agent” parameter on the “Agent” page of the agent operator.

- The agent’s response will be added to the conversation_dat table inside the agent operator.
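The same steps can be scripted. This is a minimal sketch under a few assumptions: the input table uses a header row with role and message columns, the operator names table1 and agent are placeholders, and send_user_message is a hypothetical helper. It relies on the Table DAT methods clear and appendRow and on pulsing the Call parameter listed on the Agent page.

```python
def send_user_message(table, agent, text):
    """Reset the table to a header plus one user row, then trigger the call."""
    table.clear()
    table.appendRow(["role", "message"])  # assumed column layout
    table.appendRow(["user", text])
    agent.par.Call.pulse()

# Inside TouchDesigner (not executed here):
# send_user_message(op('table1'), op('agent'), 'Hello!')
```

If the Onin1 toggle is enabled, the table change alone may already trigger a call, in which case the explicit pulse would be redundant.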

Using with Context Grabber

- Create a context_grabber operator.

- Connect the context_grabber to the Context Op parameter on the “Context” page of the agent operator.

- Configure the context_grabber to grab the desired context (e.g., an image from a TOP operator).

- In your input table, ask the agent a question about the context (e.g., “Describe the image.”).

- Pulse the “Call Agent” parameter. The agent will use the context provided by the context_grabber to answer your question.

Using Tools

- Enable “Use LOP Tools” on the “Tools” page of the agent operator.

- Connect a tool operator (e.g., a tool_dat with a Python script) to the “External Op Tools” parameter.

- In your input table, ask the agent to perform a task that requires the tool.

- Pulse the “Call Agent” parameter. The agent will execute the tool and use the result to respond.

Cancelling a Call

If an API call is taking too long or was initiated by mistake, you can cancel it by pulsing the Cancel Current parameter on the “Agent” page. This will stop the current task and reset the agent’s status to “Idle”.
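From a script, cancelling could look like the sketch below. It assumes the Active and Cancelcall parameters listed on the Agent page, reads Active with eval(), and uses cancel_if_active as a hypothetical helper name.

```python
def cancel_if_active(agent):
    """Pulse Cancelcall only while a request is in flight.

    Returns True if a cancel was issued, False otherwise.
    """
    if agent.par.Active.eval():
        agent.par.Cancelcall.pulse()
        return True
    return False

# Inside TouchDesigner (not executed here):
# cancel_if_active(op('agent'))
```

Checking Active first avoids sending a cancel when nothing is running.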

Filtering Thinking Tags

Some models may output “thinking” tags (e.g., <think>...</think>) that you don’t want to display to the user. The Thinking Filter Mode parameter on the “I/O” page can be used to remove these tags from the conversation history, the final output, or both.

- Set the Thinking Filter Mode to the desired level of filtering.

- If your model uses different tags, you can specify them in the Thinking Phrases parameter.

- You can also provide replacement text in the Thinking Replacement Text parameter.
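To make the effect of the filter concrete, here is a standalone sketch of this kind of filtering. It is not the operator's implementation: strip_thinking is a hypothetical function, and it assumes the phrases are given as a comma-separated start,end pair like the Thinkingphrases default.

```python
import re

def strip_thinking(text, phrases="<think>,</think>", replacement=""):
    """Replace every start...end span in `text` with `replacement`."""
    start, end = (p.strip() for p in phrases.split(",", 1))
    # Escape the delimiters so characters like "[" are matched literally,
    # and match lazily so each span ends at the nearest closing phrase.
    pattern = re.escape(start) + r".*?" + re.escape(end)
    # A function replacement inserts the text literally, backslashes included.
    cleaned = re.sub(pattern, lambda _m: replacement, text, flags=re.DOTALL)
    return cleaned.strip()
```

The DOTALL flag matters because thinking blocks usually span several lines.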