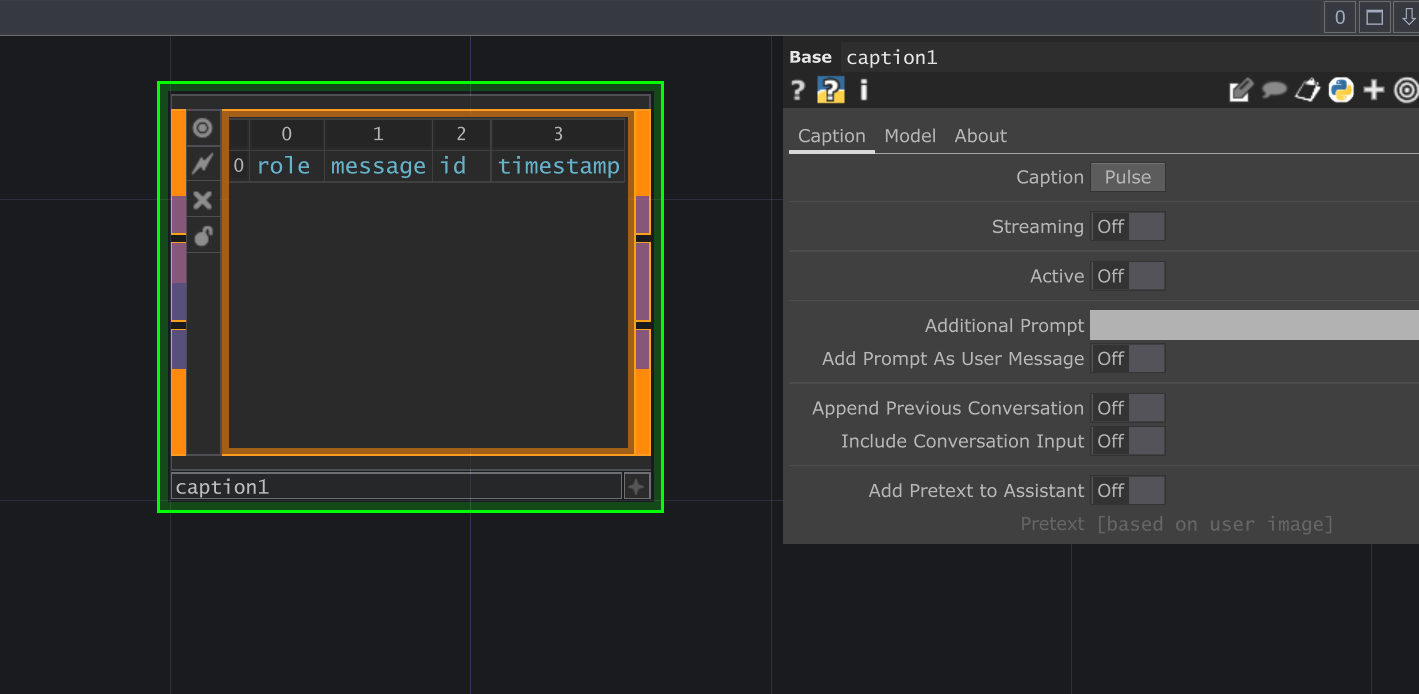

Caption

The Caption LOP generates text descriptions (captions) for images using large language models (LLMs). It takes an image TOP and an optional conversation history DAT as input, sends the image and prompt to the configured LLM, and outputs the updated conversation and the generated caption separately.

Inputs/Outputs

- Input 1 (DAT, optional): Conversation history (table with ‘role’, ‘message’, ‘id’, ‘timestamp’ columns).

- Input 2 (TOP): Image to be captioned.

- Output 1 (DAT): Conversation history with the latest user prompt and assistant response appended.

- Output 2 (DAT): Generated caption text only.
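To make the table layout concrete, here is a minimal sketch in plain Python of how a conversation table with the four columns above could grow by one user prompt and one assistant response, and how the caption-only output relates to it. The helper names (`make_row`, `append_exchange`, `latest_caption`), the `user`/`assistant` role strings, and the `id` and `timestamp` formats are assumptions for illustration; the component's actual values may differ.

```python
from datetime import datetime, timezone
import uuid

# Column names taken from the Input 1 description.
COLUMNS = ["role", "message", "id", "timestamp"]

def make_row(role, message):
    """Build one conversation row. The id and timestamp formats are assumed."""
    return [role, message, uuid.uuid4().hex, datetime.now(timezone.utc).isoformat()]

def append_exchange(table, prompt, caption):
    """Return a new table with a user prompt and assistant caption appended."""
    if not table:
        table = [list(COLUMNS)]  # start with a header row if the table is empty
    return table + [make_row("user", prompt), make_row("assistant", caption)]

def latest_caption(table):
    """Return the message of the most recent assistant row, or '' if none."""
    header = table[0]
    role_i, msg_i = header.index("role"), header.index("message")
    for row in reversed(table[1:]):
        if row[role_i] == "assistant":
            return row[msg_i]
    return ""

history = [list(COLUMNS)]
history = append_exchange(
    history,
    "Describe this image.",
    "A red bicycle leaning against a brick wall.",
)
print(latest_caption(history))
```

In this sketch, `history` plays the role of Output 1 and the value returned by `latest_caption` plays the role of Output 2.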

Parameters

Caption (Caption)

`op('caption').par.Caption` - Default: `false`

Streaming (Streaming)

`op('caption').par.Streaming` - Default: `false`

Active (Active)

`op('caption').par.Active` - Default: `false`

Additional Prompt (Prompt)

`op('caption').par.Prompt` - Default: `""` (Empty String)

Add Prompt As User Message (Adduser)

`op('caption').par.Adduser` - Default: `false`

Append Previous Conversation (Appendconversation)

`op('caption').par.Appendconversation` - Default: `false`

Include Conversation Input (Includeinput)

`op('caption').par.Includeinput` - Default: `false`

Add Pretext to Assistant (Addpretext)

`op('caption').par.Addpretext` - Default: `false`

Pretext (Pretext)

`op('caption').par.Pretext` - Default: `""` (Empty String)

Output Settings (Outputsettings)

`op('caption').par.Outputsettings` - Default: `""` (Empty String)

Max Tokens (Maxtokens)

`op('caption').par.Maxtokens` - Default: `256`

Temperature (Temperature)

`op('caption').par.Temperature` - Default: `0.0`

Model Selection (Modelselectionheader)

`op('caption').par.Modelselectionheader` - Default: `""` (Empty String)

Use Model From (Modelselection)

`op('caption').par.Modelselection` - Default: `chattd_model`

Controller [ Model ] (Modelcontroller)

`op('caption').par.Modelcontroller` - Default: `""` (Empty String)

Select API Server (Apiserver)

`op('caption').par.Apiserver` - Default: `openrouter`

AI Model (Model)

`op('caption').par.Model` - Default: `llama-3.2-11b-vision-preview`

Search (Search)

`op('caption').par.Search` - Default: `false`

Model Search (Modelsearch)

`op('caption').par.Modelsearch` - Default: `""` (Empty String)

Bypass (Bypass)

`op('caption').par.Bypass` - Default: `false`

Show Built-in Parameters (Showbuiltin)

`op('caption').par.Showbuiltin` - Default: `false`

Version (Version)

`op('caption').par.Version` - Default: `""` (Empty String)

Last Updated (Lastupdated)

`op('caption').par.Lastupdated` - Default: `""` (Empty String)

Creator (Creator)

`op('caption').par.Creator` - Default: `""` (Empty String)

Website (Website)

`op('caption').par.Website` - Default: `""` (Empty String)

ChatTD Operator (Chattd)

`op('caption').par.Chattd` - Default: `""` (Empty String)

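As a rough illustration of scripting the parameters listed above, the sketch below sets a few of them through the operator's `par` collection. The helper `configure_caption` and its validation rules are hypothetical, and the `SimpleNamespace` object is only a stand-in so the snippet runs outside TouchDesigner; inside TouchDesigner you would pass `op('caption')` instead.

```python
from types import SimpleNamespace

def configure_caption(caption_op, prompt="", max_tokens=256, temperature=0.0, streaming=False):
    """Set a few Caption LOP parameters on an operator-like object.

    Hypothetical helper: defaults mirror the documented parameter defaults.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if temperature < 0:
        raise ValueError("temperature must not be negative")
    par = caption_op.par
    par.Prompt = prompt                    # Additional Prompt
    par.Maxtokens = int(max_tokens)        # Max Tokens
    par.Temperature = float(temperature)   # Temperature
    par.Streaming = bool(streaming)        # Streaming
    return caption_op

# Stand-in for op('caption') so the sketch runs outside TouchDesigner.
demo = SimpleNamespace(par=SimpleNamespace())
configure_caption(demo, prompt="Describe the scene in one sentence.", max_tokens=128)
print(demo.par.Prompt, demo.par.Maxtokens, demo.par.Temperature, demo.par.Streaming)
```

Keeping the assignments in one function makes it easier to apply the same settings to several Caption operators from a single script.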
Requirements

- Requires a working TouchDesigner environment.

- Requires the `dot_chat_util` library, `TDStoreTools`, and `TDFunctions`.

- Requires the ChatTD operator (specified in the `Chattd` parameter) to be properly configured with API keys and model access.

API & Extension Methods

The `SimpleCaptionEXT` extension provides the following key methods, accessible via `op('your_caption_op').ext.SimpleCaptionEXT`:

- `get_model_selection()`: Determines the `api_server` and `model` based on the `Modelselection` parameter. Returns `(api_server, model)`.

- `Caption()`: The core method triggered by the `Caption` pulse parameter. Assembles the request, calls `ChatTD.Customapicall`, and manages the process.

- `HandleStreamingResponse(response, full_response=None, callbackInfo=None)`: Callback method used when `Streaming` is enabled. Processes response chunks.

- `HandleResponse(response, full_response=None, callbackInfo=None)`: Callback method used when `Streaming` is disabled. Processes the complete response.

- `ErrorCustomapicall(error_response, full_response=None)`: Callback method for handling errors during the API call.

- `ResetOp()`: Clears internal tables (`conversation_dat`, `history_dat`, `output_dat`), resets `Active` state, and clears the `Prompt` parameter.

Refer to the SimpleCaptionEXT code within the component for detailed implementation.