Handoff Operator

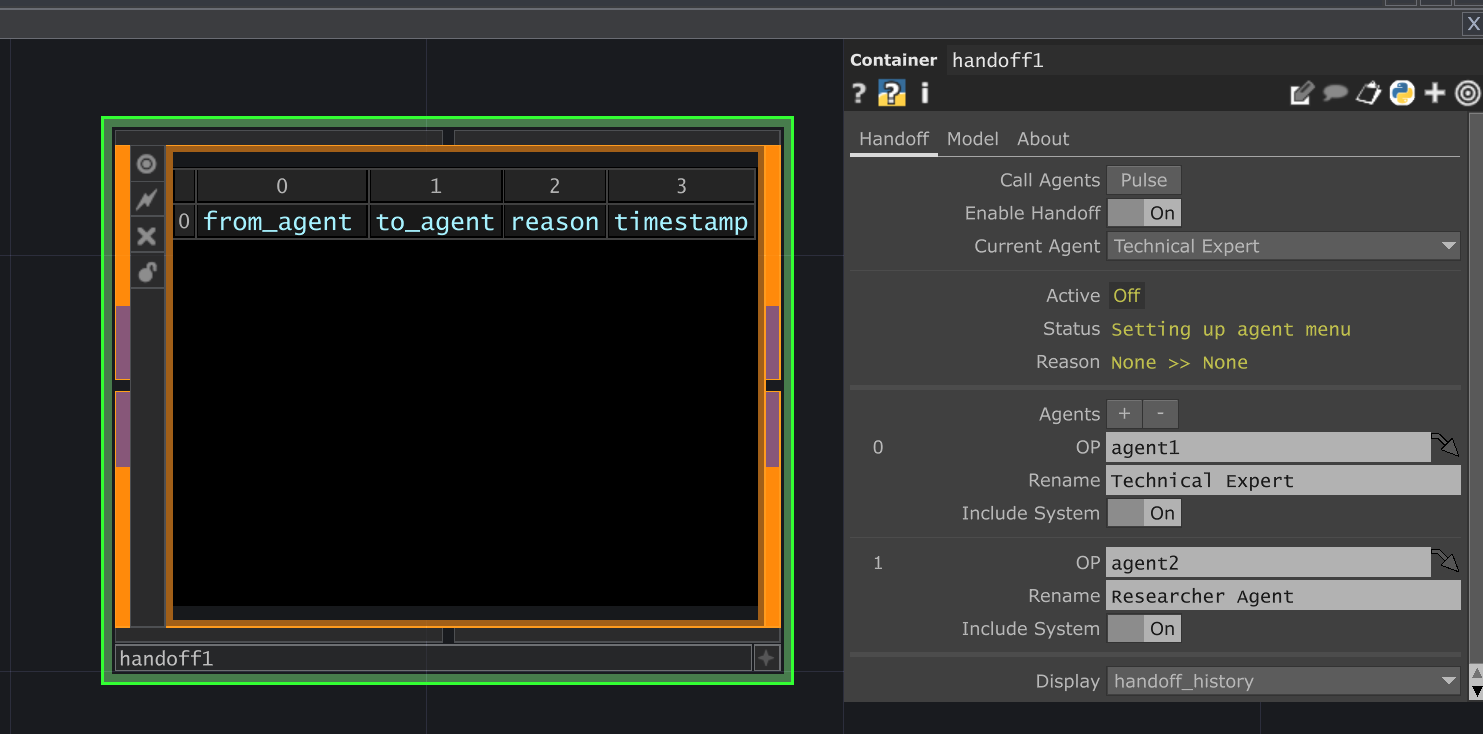

The Handoff operator acts as an intelligent router for conversations. It uses a configured Large Language Model (LLM) to analyze the ongoing conversation (provided via the input_table) and decide which of the Agent operators listed in its Agents sequence is best suited to handle the next step.

This enables dynamic workflows in which different AI assistants contribute according to their specific expertise or assigned roles. When Enable Handoff is off, the operator skips the LLM and passes the conversation directly to a manually selected agent.
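The routing decision described above can be modeled in plain Python. This is a minimal sketch, not the operator's internal code: `Agent`, `route`, and the `choose` callback are hypothetical stand-ins, with `choose` playing the role of the LLM call that is made only when handoff is enabled.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Agent:
    # Hypothetical stand-in for one block of the Agents sequence.
    name: str           # corresponds to the agent's Rename string
    system_prompt: str  # the agent's System Prompt


def route(
    conversation: Sequence[dict],
    agents: Sequence[Agent],
    enable_handoff: bool,
    manual_choice: str,
    choose: Callable[[Sequence[dict], Sequence[Agent]], str],
) -> Agent:
    """Return the agent that should handle the next turn (illustrative only)."""
    by_name = {a.name: a for a in agents}
    if not enable_handoff:
        # Handoff disabled: no LLM call, use the manually selected agent.
        return by_name[manual_choice]
    # Handoff enabled: delegate the decision to an LLM-backed chooser.
    picked = choose(conversation, agents)
    if picked not in by_name:
        raise ValueError(f"chooser returned unknown agent: {picked!r}")
    return by_name[picked]


agents = [
    Agent("Creative Writer", "You write stories and slogans."),
    Agent("Support Bot", "You fix technical problems."),
]
convo = [{"role": "user", "content": "My render keeps crashing."}]


def keyword_chooser(conversation, agents):
    # Toy stand-in for the LLM: route anything mentioning a crash to support.
    text = conversation[-1]["content"].lower()
    return "Support Bot" if "crash" in text else "Creative Writer"


print(route(convo, agents, True, "Creative Writer", keyword_chooser).name)   # Support Bot
print(route(convo, agents, False, "Creative Writer", keyword_chooser).name)  # Creative Writer
```

Injecting the chooser as a function keeps the two modes visibly separate: with handoff off, the chooser is never called.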

Parameters

Handoff Page

op('handoff').par.Call (Pulse) - Default: None

op('handoff').par.Enablehandoff (Toggle) - Default: false

op('handoff').par.Active (Toggle) - Default: None

op('handoff').par.Status (Str) - Default: None

op('handoff').par.Reason (Str) - Default: None

op('handoff').par.Agents (Sequence) - Default: None
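These parameters can also be driven from a TouchDesigner script. The sketch below assumes the component is named `handoff`, as in the parameter paths above; `trigger_handoff` and `read_decision` are hypothetical helpers that take the operator as an argument. Status and Reason may only update once the LLM call has returned, so read them later (for example on a subsequent frame) rather than immediately after the pulse.

```python
def trigger_handoff(handoff, use_llm=True):
    """Pulse the Handoff operator (sketch; `handoff` is e.g. op('handoff'))."""
    # Toggle parameters accept a bool assigned to .val.
    handoff.par.Enablehandoff.val = use_llm
    # Pulse parameters are fired with .pulse().
    handoff.par.Call.pulse()


def read_decision(handoff):
    """Return the current (Status, Reason) strings."""
    return handoff.par.Status.eval(), handoff.par.Reason.eval()


# Inside TouchDesigner (e.g. from a Text DAT run as a script):
# trigger_handoff(op('handoff'))
# ...later, once the routing LLM has answered:
# status, reason = read_decision(op('handoff'))
```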

Model Page

This page configures the LLM that the Handoff operator itself uses to make routing decisions when Enable Handoff is active. These settings do not affect the models used by the individual Agent operators defined in the sequence.

op('handoff').par.Outputsettings - Default: None

op('handoff').par.Maxtokens - Default: 2048

op('handoff').par.Temperature - Default: 0.7

op('handoff').par.Modelselectionheader - Default: None

op('handoff').par.Modelselection - Default: chattd_model

op('handoff').par.Modelcontroller - Default: None

op('handoff').par.Apiserver - Default: openrouter

op('handoff').par.Model - Default: llama-3.2-11b-vision-preview

op('handoff').par.Search - Default: false

op('handoff').par.Modelsearch - Default: None

op('handoff').par.Showmodelinfo - Default: false
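The Model page can likewise be set from a script, for instance to lower Temperature so the router's choices are less random. A minimal sketch: `configure_router` and `ROUTER_SETTINGS` are hypothetical, the values are examples rather than recommendations, and the temperature check is only a conservative sanity bound since accepted ranges vary by provider.

```python
ROUTER_SETTINGS = {
    # Parameter names come from the Model page; values are examples only.
    "Maxtokens": 2048,
    "Temperature": 0.2,  # below the 0.7 default: less random routing
}


def configure_router(handoff, settings=ROUTER_SETTINGS):
    """Apply Model-page settings to a Handoff operator (sketch)."""
    temp = settings.get("Temperature")
    if temp is not None and not 0.0 <= temp <= 2.0:
        # Conservative sanity check; providers differ in what they accept.
        raise ValueError(f"temperature out of range: {temp}")
    for name, value in settings.items():
        # getattr(handoff.par, name) returns the Par object with that name.
        getattr(handoff.par, name).val = value


# In TouchDesigner: configure_router(op('handoff'))
```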

About Page

op('handoff').par.Bypass (Toggle) - Default: false

op('handoff').par.Showbuiltin (Toggle) - Default: false

op('handoff').par.Version (Str) - Default: None

op('handoff').par.Lastupdated (Str) - Default: None

op('handoff').par.Creator (Str) - Default: None

op('handoff').par.Website (Str) - Default: None

op('handoff').par.Chattd (OP) - Default: None

Usage Examples

Simple Routing

- Add two or more Agent OPs to your network, each configured with a different System Prompt reflecting its specialty (e.g., one for creative writing, one for technical support).
- In the Handoff OP’s Agents sequence, add blocks linking to each Agent OP.
- Give each agent a clear name in its Rename parameter (e.g., “Creative Writer”, “Support Bot”), and ensure Include System is On.
- Connect your input conversation DAT (e.g., from a Chat OP) to the Handoff OP’s input.
- Ensure Enable Handoff is On and the Model page is configured with an LLM capable of function calling (such as GPT-4, Claude 3, or Gemini).
- Pulse Call Agents. The Handoff OP uses its LLM to analyze the last message together with the agent descriptions and system prompts, then routes the conversation by calling the appropriate Agent.
- The Reason parameter shows the LLM’s rationale for its decision.
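Function calling is what lets the router LLM return a structured choice instead of free text. The sketch below shows one way such a tool could be described in the widely used OpenAI-style `tools` format, using the agents' Rename strings as an enum. This is an assumption for illustration: the operator's actual schema is not documented here, and `build_handoff_tool` and the tool name `transfer_to_agent` are hypothetical.

```python
import json


def build_handoff_tool(agents):
    """Build an OpenAI-style tool definition from (name, system_prompt) pairs.

    Hypothetical: illustrates the idea, not the operator's internal schema.
    """
    # The description lists each agent so the LLM can compare specialties.
    roster = "\n".join(f"- {name}: {prompt}" for name, prompt in agents)
    return {
        "type": "function",
        "function": {
            "name": "transfer_to_agent",
            "description": "Pick the agent best suited to reply next.\n" + roster,
            "parameters": {
                "type": "object",
                "properties": {
                    # The model can only answer with one of these names.
                    "agent": {"type": "string", "enum": [n for n, _ in agents]},
                    # Free-text rationale, analogous to the Reason parameter.
                    "reason": {"type": "string"},
                },
                "required": ["agent", "reason"],
            },
        },
    }


agents = [
    ("Creative Writer", "Writes stories, poems and slogans."),
    ("Support Bot", "Answers technical support questions."),
]
print(json.dumps(build_handoff_tool(agents), indent=2))
```

Constraining `agent` to an enum of the Rename strings illustrates why clear, distinct names matter: the model can only pick from them, and its only evidence for each is the name and prompt text.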

Manual Agent Selection

- Configure the Agents sequence as above.
- Turn Enable Handoff Off.
- Select the target agent manually using the Current Agent menu.
- Pulse Call Agents. The conversation is sent directly to the selected agent without LLM intervention.
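The manual path can be scripted as well. This sketch assumes the Current Agent menu's scripting name is `Currentagent` and that its menu entry names match the agent names; both are assumptions, so check the actual parameter name in the operator's parameter dialog before use. `send_to_agent` is a hypothetical helper.

```python
def send_to_agent(handoff, agent_name, menu_par="Currentagent"):
    """Bypass the LLM and route to a chosen agent (sketch).

    `menu_par` is a HYPOTHETICAL scripting name for the Current Agent menu.
    """
    par = getattr(handoff.par, menu_par)
    # Menu parameters expose their valid entry names via menuNames.
    if agent_name not in par.menuNames:
        raise ValueError(f"{agent_name!r} is not one of {list(par.menuNames)}")
    handoff.par.Enablehandoff.val = False  # turn off LLM routing
    par.val = agent_name                    # select the target agent
    handoff.par.Call.pulse()                # send the conversation


# In TouchDesigner: send_to_agent(op('handoff'), 'Support Bot')
```

Validating the name before touching any parameter means a typo leaves the operator's state unchanged.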

Technical Notes

- The quality of the handoff decision depends heavily on the capability of the LLM selected on the Model page and on the clarity of the Rename strings and included System Prompts for each agent in the sequence.
- Ensure the LLM used for handoff supports function calling / tool use, because the routing mechanism relies on it.
- The Handoff operator manages the conversation flow but relies on the individual Agent operators to generate the actual responses.
- The conversation_dat viewer shows the state before the final agent response is added. The agent’s response is handled asynchronously via callbacks.
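Because routing relies on tool use, a router reply arrives as a tool call rather than as plain text. The sketch below parses an assistant message in the OpenAI chat-completions shape (`tool_calls[i].function.arguments` is a JSON string) and falls back to a default agent when no usable tool call is present, for example when a model without function-calling support answers in plain text. Whether the Handoff operator uses exactly this shape internally is an assumption; `parse_handoff` and the tool name `transfer_to_agent` are hypothetical.

```python
import json


def parse_handoff(message, valid_agents, fallback):
    """Extract (agent, reason) from an OpenAI-style assistant message.

    Returns the fallback agent when no usable tool call is present.
    """
    for call in message.get("tool_calls") or []:
        fn = call.get("function", {})
        try:
            args = json.loads(fn.get("arguments", "{}"))
        except (json.JSONDecodeError, TypeError):
            continue  # malformed arguments: try the next call, if any
        if not isinstance(args, dict):
            continue
        agent = args.get("agent")
        if agent in valid_agents:
            return agent, args.get("reason", "")
    # No usable tool call (e.g. the model replied in plain text): fall back.
    return fallback, "no valid tool call; used fallback"


reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {
            "name": "transfer_to_agent",
            "arguments": '{"agent": "Support Bot", "reason": "Technical issue"}',
        },
    }],
}
agents = {"Creative Writer", "Support Bot"}
print(parse_handoff(reply, agents, "Creative Writer"))
# ('Support Bot', 'Technical issue')
```

Checking the returned name against the known agents guards against a model that invents an agent name even when the schema offers an enum.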