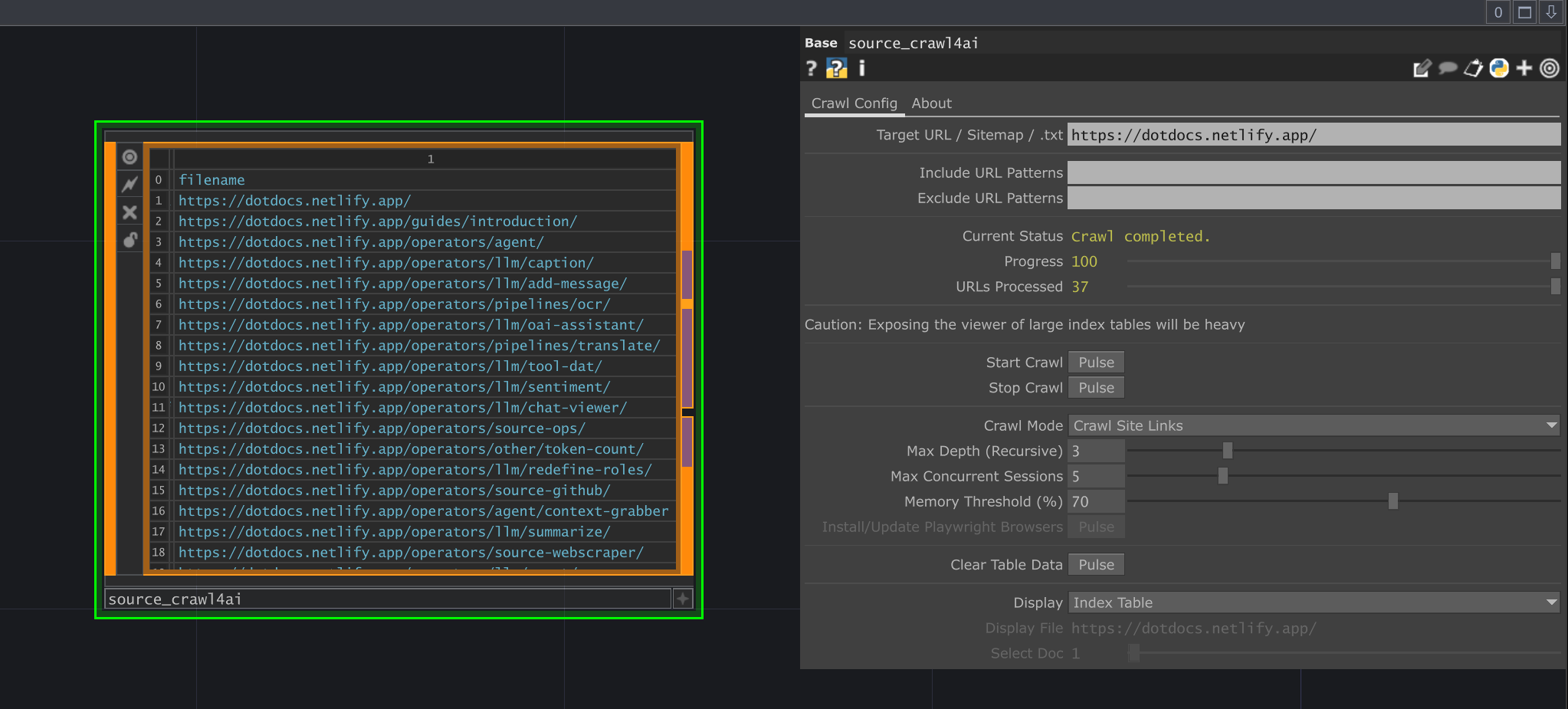

Source Crawl4ai Operator

Source Crawl4AI v1.3.0 [ September 2, 2025 ]

- Table input mode support

- Better deduplication algorithms

- Enhanced multi-agent source gathering

- Improved handling of multiple sources at once

The Source Crawl4ai LOP utilizes the crawl4ai Python library to fetch content from web pages, sitemaps, or lists of URLs. It uses headless browsers (via Playwright) to render pages, extracts the main content, converts it to Markdown, and structures the output into a DAT table compatible with the Rag Index operator. It supports various crawling modes, URL filtering, and resource management features like concurrency limits and adaptive memory usage control.

Requirements

- Python Packages:

  - `crawl4ai`: The core crawling library.
  - `playwright`: Required by `crawl4ai` for browser automation.
  - `requests`: Implicitly needed for sitemap fetching.
  - These can be installed via the ChatTD operator's Python manager, starting with `crawl4ai`.

- Playwright Browsers: After installing the Python packages, download the necessary browser binaries using the ‘Install/Update Playwright Browsers’ parameter on this operator.

- ChatTD Operator: Required for dependency management (package installation) and asynchronous task execution. Ensure the ‘ChatTD Operator’ parameter on the ‘About’ page points to your configured ChatTD instance.

Input/Output

Inputs

None

Outputs

- Output Table (DAT): The primary output, containing the crawled content. Its columns match the requirements of the Rag Index operator:

  - `doc_id`: Unique ID for the crawled page/chunk.
  - `filename`: Source URL of the crawled page.
  - `content`: Crawled content formatted as Markdown.
  - `metadata`: JSON string containing the source URL, timestamp, content length, etc.
  - `source_path`: Source URL (duplicate of `filename`).
  - `timestamp`: Unix timestamp of when the content was processed.

- Internal DATs (accessible via the operator viewer): `index_table` (summary view) and `content_table` (detailed view of the selected document).

- Status/Log: Information is logged via the linked Logger component within ChatTD. Key status information is also reflected in the ‘Status’, ‘Progress’, and ‘URLs Processed’ parameters.
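To make the column layout concrete, here is a minimal Python sketch that builds one row in the Output Table layout from a URL and its Markdown. The `doc_id` scheme (a truncated SHA-1 of the URL), the metadata key names, and the helper name `make_output_row` are assumptions for illustration, not the operator's actual implementation.

```python
import hashlib
import json
import time

# Column order of the Output Table DAT, as listed above.
OUTPUT_COLUMNS = ["doc_id", "filename", "content", "metadata", "source_path", "timestamp"]


def make_output_row(url, markdown, timestamp=None):
    """Build one Output Table row (illustrative sketch).

    The doc_id scheme and metadata keys are hypothetical; the operator's
    real values may differ.
    """
    ts = int(timestamp if timestamp is not None else time.time())
    doc_id = hashlib.sha1(url.encode("utf-8")).hexdigest()[:16]  # hypothetical ID scheme
    metadata = {
        "source_url": url,
        "timestamp": ts,
        "content_length": len(markdown),
    }
    # filename and source_path both carry the source URL.
    return [doc_id, url, markdown, json.dumps(metadata), url, ts]


if __name__ == "__main__":
    row = make_output_row("https://example.com/page", "# Title\n\nBody text.")
    record = dict(zip(OUTPUT_COLUMNS, row))
    print(record["doc_id"], json.loads(record["metadata"]))
```

Inside TouchDesigner, a row of the real output DAT can be read back as plain strings with `[c.val for c in dat.row(i)]` and zipped with the header row in the same way.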

Parameters

Page: Crawl Config

| Parameter | Type | Default | Range |
|---|---|---|---|
| `op('source_crawl4ai').par.Url` | Str | None | |
| `op('source_crawl4ai').par.Urltable` | OP | None | |
| `op('source_crawl4ai').par.Includepatterns` | Str | None | |
| `op('source_crawl4ai').par.Excludepatterns` | Str | None | |
| `op('source_crawl4ai').par.Status` | Str | None | |
| `op('source_crawl4ai').par.Progress` | Float | None | |
| `op('source_crawl4ai').par.Urlsprocessed` | Int | None | |
| `op('source_crawl4ai').par.Startcrawl` | Pulse | None | |
| `op('source_crawl4ai').par.Stopcrawl` | Pulse | None | |
| `op('source_crawl4ai').par.Clearontable` | Toggle | None | |
| `op('source_crawl4ai').par.Usehistory` | Toggle | None | |
| `op('source_crawl4ai').par.Clearhistory` | Pulse | None | |
| `op('source_crawl4ai').par.Maxdepth` | Int | 2 | 1 to 10 |
| `op('source_crawl4ai').par.Maxconcurrent` | Int | 5 | 1 to 20 |
| `op('source_crawl4ai').par.Memorythreshold` | Float | 70.0 | 30 to 95 |
| `op('source_crawl4ai').par.Installplaywright` | Pulse | None | |
| `op('source_crawl4ai').par.Clearoutput` | Pulse | None | |
| `op('source_crawl4ai').par.Displayfile` | Str | None | |
| `op('source_crawl4ai').par.Selectdoc` | Int | 1 | 1 to 1 |
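The same Crawl Config parameters can be driven from a Python script. The sketch below assumes it runs inside TouchDesigner with `crawler = op('source_crawl4ai')`; the helper name `start_crawl` is hypothetical, and it only touches parameters listed in the table above. ‘Crawl Mode’ does not appear in the table, so the sketch leaves it as set in the UI.

```python
# Documented ranges from the Crawl Config table (inclusive).
RANGES = {
    "Maxdepth": (1, 10),
    "Maxconcurrent": (1, 20),
    "Memorythreshold": (30.0, 95.0),
}


def start_crawl(crawler, url, max_depth=2, max_concurrent=5, memory_threshold=70.0):
    """Set core Crawl Config parameters, then pulse Start Crawl.

    `crawler` is assumed to be op('source_crawl4ai'). Out-of-range values
    raise ValueError before any parameter is changed.
    """
    values = {
        "Maxdepth": max_depth,
        "Maxconcurrent": max_concurrent,
        "Memorythreshold": memory_threshold,
    }
    # Validate everything first so a bad value leaves the operator untouched.
    for name, value in values.items():
        low, high = RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside the documented range {low} to {high}")

    crawler.par.Url.val = url
    for name, value in values.items():
        getattr(crawler.par, name).val = value
    crawler.par.Startcrawl.pulse()  # equivalent to clicking 'Start Crawl'
```

Example: `start_crawl(op('source_crawl4ai'), 'https://example.com/sitemap.xml', max_concurrent=3)`. Checking the documented ranges up front surfaces a mistake as a Python error instead of relying on the parameter's own range handling.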

Page: Agents

| Parameter | Type | Default |
|---|---|---|
| `op('source_crawl4ai').par.Agenttotable` | Toggle | None |

Page: About

| Parameter | Type | Default |
|---|---|---|
| `op('source_crawl4ai').par.Bypass` | Toggle | None |
| `op('source_crawl4ai').par.Showbuiltin` | Toggle | None |
| `op('source_crawl4ai').par.Version` | Str | None |
| `op('source_crawl4ai').par.Lastupdated` | Str | None |
| `op('source_crawl4ai').par.Creator` | Str | None |
| `op('source_crawl4ai').par.Website` | Str | None |
| `op('source_crawl4ai').par.Chattd` | OP | None |
| `op('source_crawl4ai').par.Clearlog` | Pulse | None |
| `op('source_crawl4ai').par.Converttotext` | Toggle | None |

Agent Tool Integration

This operator exposes two tools that allow Agent and Gemini Live LOPs to crawl web pages and websites to extract content, supporting both single-page crawling and recursive whole-site crawling for AI-driven content gathering.

Use the Tool Debugger operator to inspect exact tool definitions, schemas, and parameters.

The Source Crawl4ai LOP can be used as a tool by Agent LOPs, allowing an AI to autonomously crawl web pages and websites to gather information.

Available Tools

When connected to an Agent, this operator provides the following functions:

- `crawl_single_page(url)`: Fetches and returns the text content of a single, specific web page. Best used when the agent needs the contents of one exact URL.

- `crawl_full_website_recursively(url, max_depth=2)`: Crawls an entire website by following internal links, starting from a given URL. It processes up to 20 pages to gather comprehensive information. Ideal when an agent needs to understand the content of a whole website, not just a single page.
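The behavior described for `crawl_full_website_recursively` (follow internal links from a start URL, stop at a depth limit, cap the total at 20 pages) corresponds to a breadth-first crawl. The following Python sketch shows that idea over a caller-supplied `get_links` function so it runs without a browser. It is not the operator's or crawl4ai's actual traversal code; the helper names are hypothetical, and treating the start page as depth 0 is an assumption.

```python
from collections import deque
from urllib.parse import urljoin, urlparse


def crawl_recursively(start_url, get_links, max_depth=2, max_pages=20):
    """Breadth-first crawl limited by link depth and total page count.

    `get_links(url)` (hypothetical) returns the hrefs found on a page.
    Only links on the start URL's host are followed. Returns the
    visited URLs in crawl order.
    """
    host = urlparse(start_url).netloc
    visited = []
    seen = {start_url}
    queue = deque([(start_url, 0)])  # (url, depth); start page is depth 0
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # pages at the depth limit are kept but not expanded
        for href in get_links(url):
            # Resolve relative links and drop #fragments before de-duplicating.
            link = urljoin(url, href).split("#", 1)[0]
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited
```

Breadth-first order means that when the page cap is hit, the pages closest to the start URL have already been collected.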

How It Works

- Connect to Agent: Add the Source Crawl4ai LOP to the ‘Tool’ sequence parameter on an Agent LOP.

- Agent Prompts: When the Agent receives a prompt that requires web content, it can choose to call one of the crawl tools.

- Execution: The Source Crawl4ai LOP executes the crawl asynchronously and returns the extracted Markdown content to the Agent.

- Response: The Agent then uses this content to formulate its response.

Example Agent Prompt

"Please summarize the main points from the article at https://example.com/news/latest-ai-breakthroughs and also give me an overview of the company's products from their website."

In this scenario, the Agent could:

- Call `crawl_single_page` with the URL https://example.com/news/latest-ai-breakthroughs.

- Call `crawl_full_website_recursively` with the URL https://example.com/products.

- Use the content from both tool calls to generate a comprehensive summary and overview.

Usage Examples

Crawling a Single Page

- Set ‘Target URL / Sitemap / .txt’ to the full URL (e.g., https://docs.derivative.ca/Introduction_to_Python).

- Set ‘Crawl Mode’ to ‘Single Page’.

- Pulse ‘Start Crawl’.

- Monitor ‘Status’ and view results in the Output Table DAT.

Crawling from a Sitemap

- Set ‘Target URL / Sitemap / .txt’ to the EXACT URL of the sitemap (e.g., https://example.com/sitemap.xml).

- Set ‘Crawl Mode’ to ‘Sitemap Batch’.

- (Optional) Set ‘Include/Exclude URL Patterns’ to filter URLs from the sitemap.

- Adjust ‘Max Concurrent Sessions’ based on your system.

- Pulse ‘Start Crawl’.

- Monitor ‘Status’ and ‘Progress’.
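For reference, the sitemap-plus-filter step can be sketched in Python with the standard library: pull every `<loc>` from a sitemap.org-format XML document, then apply include/exclude patterns. The pattern rules here (whitespace-separated, `*`/`?` wildcards, matched anywhere in the URL) are assumptions about how ‘Include/Exclude URL Patterns’ are interpreted, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from fnmatch import fnmatchcase

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text):
    """Return every <loc> URL in a sitemap.org XML document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


def filter_urls(urls, include="", exclude=""):
    """Keep URLs that match any include pattern and no exclude pattern.

    Patterns are whitespace-separated, may use * and ? wildcards, and are
    matched anywhere in the URL (assumed semantics). An empty include list
    keeps everything not excluded.
    """
    inc = include.split()
    exc = exclude.split()

    def matches(url, pattern):
        # Wrap in * so "/blog" matches ".../blog/post"; case-sensitive on all OSes.
        return fnmatchcase(url, f"*{pattern}*")

    return [
        u for u in urls
        if (not inc or any(matches(u, p) for p in inc))
        and not any(matches(u, p) for p in exc)
    ]
```

Against a sitemap index file, `sitemap_urls` returns the child sitemap URLs, since those also live in `<loc>` elements under the same namespace.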

Recursive Crawl of a Small Site Section

- Set ‘Target URL / Sitemap / .txt’ to the starting page (e.g., https://yoursite.com/documentation/).

- Set ‘Crawl Mode’ to ‘Crawl Site Links’.

- Set ‘Max Depth’ (e.g., 3). Be cautious with high values on large sites.

- (Optional) Set ‘Exclude URL Patterns’ to avoid specific sections (e.g., /blog /forum).

- Adjust ‘Max Concurrent Sessions’.

- Pulse ‘Start Crawl’.

Initial Setup (Installation)

- Ensure the ‘ChatTD Operator’ parameter points to your ChatTD instance.

- Use ChatTD’s Python Manager to install the ‘crawl4ai’ package.

- Return to this operator. Pulse the ‘Install/Update Playwright Browsers’ parameter.

- Monitor the Textport for download progress. Installation is complete when the logs indicate success.
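If the ‘Install/Update Playwright Browsers’ pulse fails, Playwright's own installer can be run by hand. This Python sketch builds the standard `python -m playwright install` command. It assumes the pulse wraps the same installer (not confirmed here), the helper names are hypothetical, and `python` must be the interpreter where `crawl4ai` and `playwright` are installed. Inside TouchDesigner, `sys.executable` typically points at the TouchDesigner executable rather than a plain Python interpreter, so pass the path explicitly.

```python
import subprocess


def playwright_install_command(python, browsers=()):
    """Build `<python> -m playwright install [browsers...]`.

    With no browsers listed, Playwright installs its default browser set.
    """
    return [python, "-m", "playwright", "install", *browsers]


def install_browsers(python, browsers=("chromium",)):
    # Blocks until the download completes; raises CalledProcessError on failure.
    return subprocess.run(playwright_install_command(python, browsers), check=True)
```

`subprocess.run` blocks until the download finishes, so calling `install_browsers` from TouchDesigner's main thread will freeze the UI for the duration; running it from a terminal is the safer choice.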

Technical Notes

- Dependencies: Requires the `crawl4ai` and `playwright` Python packages, installable via ChatTD. Crucially, Playwright also needs browser binaries, downloaded by pulsing the ‘Install/Update Playwright Browsers’ parameter.

- Resource Usage: Crawling, especially in batch modes (Sitemap, Recursive, Text File), uses headless browsers and can consume significant CPU, RAM, and network bandwidth.

- Concurrency: Adjust ‘Max Concurrent Sessions’ carefully. Setting it too high can destabilize TouchDesigner or your system.

- Memory Management: The ‘Memory Threshold (%)’ parameter helps prevent crashes on large crawls by pausing new sessions when system RAM usage is high.

- Filtering: Use ‘Include URL Patterns’ and ‘Exclude URL Patterns’ to limit the scope of crawls and avoid unwanted pages or file types. Wildcards (`*`, `?`) are supported.

- Output Format: Content is output as Markdown in the `content` column of the output DAT, ready for ingestion by the Rag Index operator.

- Stopping: Pulsing ‘Stop Crawl’ attempts a graceful shutdown, but browser tasks that are already running may take some time to fully terminate.
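The Concurrency and Memory Management notes describe two throttles working together. A minimal asyncio sketch of that pattern: a semaphore caps concurrent sessions at ‘Max Concurrent Sessions’, and each session waits before starting while memory usage is above ‘Memory Threshold (%)’. The `fetch` and `memory_percent` callables are stand-ins (a real setup might use `psutil.virtual_memory().percent`), and `crawl_batch` is a hypothetical name; this illustrates the technique and is not the operator's code.

```python
import asyncio


async def crawl_batch(urls, fetch, memory_percent, max_concurrent=5,
                      memory_threshold=70.0, poll_seconds=0.5):
    """Run `await fetch(url)` for every URL under two limits.

    - At most `max_concurrent` fetches run at once (asyncio.Semaphore).
    - A fetch does not start while `memory_percent()` exceeds
      `memory_threshold`; fetches already running are left alone.
    Results are returned in the same order as `urls`.
    """
    semaphore = asyncio.Semaphore(max_concurrent)

    async def run_one(url):
        async with semaphore:
            # Pause before opening a new session while memory is high.
            while memory_percent() > memory_threshold:
                await asyncio.sleep(poll_seconds)
            return await fetch(url)

    return await asyncio.gather(*(run_one(u) for u in urls))
```

Because the memory check happens after acquiring the semaphore, a paused session holds its slot, so the number of sessions never exceeds the concurrency cap even while waiting.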

Related Operators

- Rag Index: Ingests the output of this operator to create a searchable index.

- ChatTD: Provides core services like dependency management and asynchronous task execution required by this operator.

- Source Webscraper: An alternative web scraping operator using a different backend (`aiohttp`, `trafilatura`). It may be lighter weight for simpler scraping tasks that do not require full browser rendering.